What is Augmented Reality?

Augmented reality (AR) is a computer-based technology that adds virtual content to the real world environment. Using special AR glasses, smartphones and tablets, three-dimensional models or 2D elements (text, images, audio/video) are superimposed on the real world as additional information. Interaction with digital content can also take place in AR.


The scientific classification of Augmented Reality

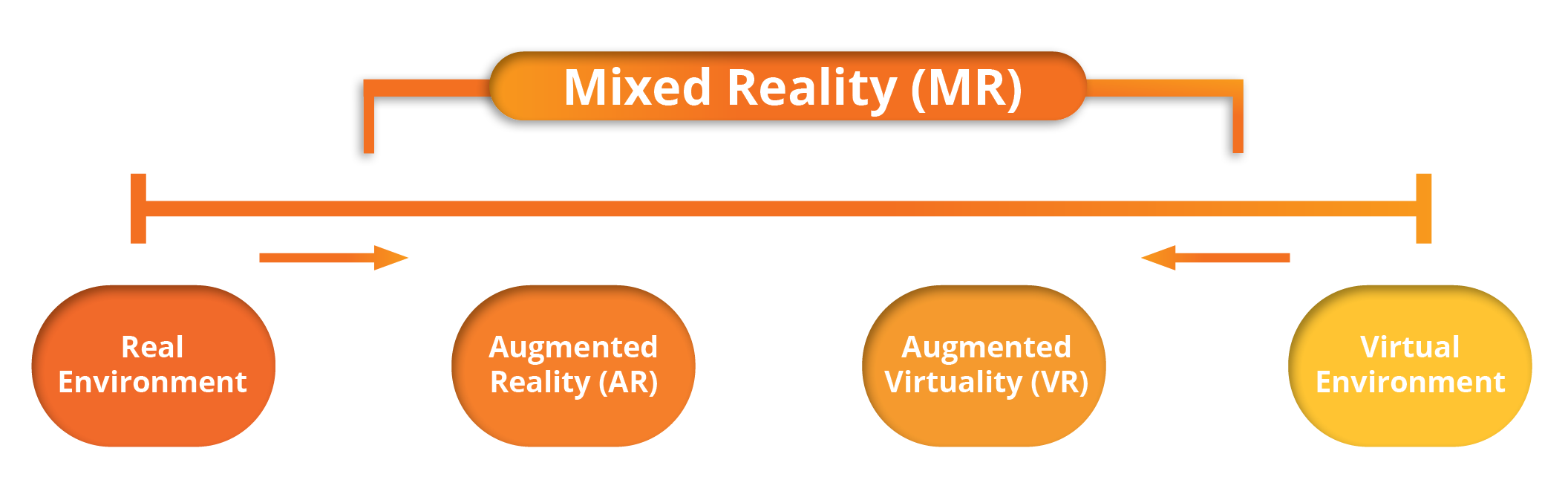

Paul Milgram and Fumio Kishino classified immersive technologies as early as 1994 in their paper “A taxonomy of mixed reality visual displays”, in the form of the virtuality continuum. The continuum represents a scale from the real environment (reality, left) to the virtual environment (virtuality, right). The further to the right one moves along the scale, the greater the proportion of the virtual environment compared to reality.

Along the virtuality continuum, Milgram and Kishino situate the terms augmented reality (AR), augmented virtuality (AV), virtual reality (VR), and mixed reality (MR). Accordingly, AR is a combination of the real and virtual worlds in which the real world predominates. Unlike VR, AR does not create a new world, but supplements the existing real environment with digital content. A relatively new term that is becoming increasingly established in both research and industry is extended reality (XR). XR can be used as an umbrella term for all immersive technologies, including augmented and virtual reality.
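One way to read the continuum is as a single number: the share of virtual content in what the user perceives. The following Python sketch is purely illustrative. The function name and the 0.5 threshold that separates AR from AV are assumptions made for this example, not values taken from Milgram and Kishino.

```python
def classify_on_continuum(virtual_share: float) -> str:
    """Map a share of virtual content to a term on the virtuality continuum.

    virtual_share: 0.0 means purely real, 1.0 means purely virtual.
    The 0.5 threshold is an illustrative assumption, not part of the taxonomy.
    """
    if not 0.0 <= virtual_share <= 1.0:
        raise ValueError("virtual_share must be between 0.0 and 1.0")
    if virtual_share == 0.0:
        return "real environment"
    if virtual_share == 1.0:
        return "virtual reality (VR)"
    # Everything strictly between the two extremes counts as mixed reality (MR).
    if virtual_share < 0.5:
        return "augmented reality (AR)"  # the real world predominates
    return "augmented virtuality (AV)"   # the virtual world predominates
```

For instance, a camera image with a few overlaid labels would sit near the left end of the scale and be classified as AR by this sketch.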

How does Augmented Reality work?

Augmented reality superimposes digital information on a natural environment captured by a camera. Each AR system consists of three components: Hardware, Software Toolkit and Software Application.

1) Hardware

Hardware refers to the output devices on which the virtual content is displayed. Smartphones, tablets and AR glasses are the most common display devices. A capable processor (CPU) and graphics processing unit (GPU) are important for rendering digital AR content smoothly. To visualize complex data, XR Streaming can also be used to offload computing and graphics work to more powerful local servers or the cloud. In addition, the hardware needs a number of sensors to perceive its environment:

- Depth sensor: to measure depth and distances

- Gyroscope: to detect the rotation and orientation of the output device

- Proximity sensor: to measure how close or far away something is

- Accelerometer: to detect changes in speed and motion

- Light sensor: to measure light intensity and brightness
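AR devices usually combine several of these sensors rather than relying on one alone. A gyroscope measures rotation rate, which is smooth but drifts over time, while an accelerometer senses the direction of gravity, which is noisy but does not drift. The Python sketch below shows a complementary filter, one common and simple way to fuse the two into a tilt estimate. It is a minimal illustration, not the method of any particular device: the function names, the axis and sign convention, and the blending factor `alpha = 0.98` are assumptions.

```python
import math


def accel_tilt_deg(ax: float, ay: float, az: float) -> float:
    """Tilt (pitch) in degrees derived from the gravity vector seen by the accelerometer.

    The axis and sign convention used here is an assumption for this sketch.
    """
    return math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))


def complementary_filter(
    pitch_deg: float,
    gyro_rate_dps: float,
    accel_pitch_deg: float,
    dt: float,
    alpha: float = 0.98,
) -> float:
    """Blend the integrated gyroscope rate with the accelerometer tilt.

    pitch_deg:        previous tilt estimate in degrees
    gyro_rate_dps:    rotation rate from the gyroscope in degrees per second
    accel_pitch_deg:  tilt derived from the accelerometer in degrees
    dt:               time since the previous sample in seconds
    alpha:            weight of the gyroscope path (illustrative value)
    """
    gyro_estimate = pitch_deg + gyro_rate_dps * dt  # short-term, drifts over time
    return alpha * gyro_estimate + (1.0 - alpha) * accel_pitch_deg
```

Called once per sensor sample, the filter follows fast rotations through the gyroscope term while the accelerometer term slowly pulls the estimate back toward the true tilt.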

2) Software Toolkit

The second component of an AR system is a software development kit (SDK). Examples include Microsoft’s Mixed Reality Toolkit for HoloLens, Google’s ARCore for Android and Apple’s ARKit for iOS devices. All three are designed to leverage the 3D AR capabilities of their target devices and include methods for placing and interacting with holograms. Typical capabilities are:

- Environment understanding: This allows the AR device to recognize salient points and flat surfaces in order to map the environment. The system can then place virtual objects precisely on these surfaces.

- Motion tracking: This allows the AR device to determine its position relative to its environment. Virtual objects can then be placed at designated locations in the image.

- Light estimation: This gives the device the ability to perceive the current lighting conditions of the environment. Virtual objects can then be placed in the same lighting conditions to increase realism.
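Placing a virtual object on a detected surface comes down to a geometric question: where does a ray from the camera (for example, through the point the user tapped) meet the detected plane? The Python sketch below shows this ray-plane intersection. It is a simplified illustration and not the API of ARCore, ARKit or the Mixed Reality Toolkit, which expose this step through their own calls; the names `hit_test`, `Vec3` and the tolerance value are assumptions.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def hit_test(
    ray_origin: Vec3,
    ray_dir: Vec3,
    plane_point: Vec3,
    plane_normal: Vec3,
) -> Optional[Vec3]:
    """Return the point where a camera ray meets a detected plane, or None.

    The plane is described by any point on it and its normal vector.
    """
    denom = dot(ray_dir, plane_normal)
    if abs(denom) < 1e-9:
        return None  # ray runs parallel to the plane
    to_plane = (
        plane_point[0] - ray_origin[0],
        plane_point[1] - ray_origin[1],
        plane_point[2] - ray_origin[2],
    )
    t = dot(to_plane, plane_normal) / denom
    if t < 0:
        return None  # plane lies behind the camera
    return (
        ray_origin[0] + t * ray_dir[0],
        ray_origin[1] + t * ray_dir[1],
        ray_origin[2] + t * ray_dir[2],
    )
```

With a camera held 1.5 m above a floor plane and looking diagonally downward, `hit_test((0.0, 1.5, 0.0), (0.0, -1.0, -1.0), (0.0, 0.0, 0.0), (0.0, 1.0, 0.0))` yields a point on the floor 1.5 m in front of the camera, which is where the virtual object would be anchored.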


3) Software Application

The last component of an AR system is the application, or AR app, itself. It provides AR functions such as visualizing 3D objects, along with a user interface. Only with the application is it possible to work on holograms or to collaborate with several users. One example is the augmented reality engineering software AR 3S.

AR 3S – The Augmented Reality Engineering Space

With the AR Engineering App AR 3S, engineers and industrial designers can visualize and collaborate on CAD data in an augmented reality environment. Industrial workflows such as prototyping, factory planning, quality control and technical training are thus raised to a new level of efficiency.

How is AR applied?

Augmented reality applications can be categorized in the areas of collaboration, product development, assistance, learning and navigation/tracking. However, it should also be noted that the technology is constantly evolving and new AR use cases are being developed for the industry.

Collaboration

Collaboration applications focus on shared experience and development. Here, AR enables an uncomplicated, fast and, above all, smooth experience of 3D models in a collaborative space. This means that all participants can come together for a virtual meeting regardless of location – even across large geographical distances – and interact with 3D objects together.

Assistance

Applications in the field of assistance provide people with additional information so that they can complete tasks without errors. Whether the assistance comes from a machine or from a remote colleague is irrelevant. In the remote expert scenario, for example, an employee wears smart glasses and an expert at another location can see what the employee sees. The expert can then give real-time instructions to the worker on site. In another scenario, an employee uses an AR-capable smartphone and the technology overlays data, instructions or notes on the real objects.

Learning

In today’s working environments, people need to learn new things all the time. Augmented reality can convey knowledge intuitively, for example through step-by-step instructions, and it can simulate the consequences of incorrect actions. When training with virtual objects, learning transfers well to the real task and what is learned is remembered better. With augmented reality, training content and enjoyment of learning can be combined in a playful way. The AR Sandbox is an example of how students can learn the basics of geomorphological shaping.

Product Development

In augmented reality, the “digital twin” of a product can be represented virtually, in whole or in part. In the product development phase, designs and prototypes can thus be evaluated quickly and overlaid on real objects. In machine and prototype construction as well as in factory planning, design reviews with augmented reality help to realize developments economically.

Tracking/Navigation

Tracking is the determination of time-varying parameters, usually the position of users and real objects. For example, any manufacturing facility contains a large number of objects such as machines and tools. Augmented reality allows collected position data to be visualized on site in real time. The user sees navigation instructions, machine data, tool positions and similar information on their AR glasses. Machines and equipment can thus be used more effectively, and employees can be guided safely and quickly through industrial environments. The prerequisite for this is correct registration between real and virtual objects. QR codes can serve as AR markers for this purpose, and sophisticated positioning standards such as omlox can be connected to AR applications.
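Registration can be pictured as a coordinate transform. If a marker has a known pose in the facility's coordinate system and the AR device has measured the pose of the same marker in its own session coordinates, every tracked position can be converted from one system to the other. The Python sketch below does this on the 2D floor plane. It is a simplified illustration under stated assumptions: the names `Pose2D` and `facility_to_session` are hypothetical, it does not use any omlox or vendor API, and real systems work with full 3D poses.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Pose2D:
    """Position in metres and heading in radians on the floor plane."""
    x: float
    y: float
    yaw_rad: float


def facility_to_session(
    point: Tuple[float, float],
    marker_in_facility: Pose2D,
    marker_in_session: Pose2D,
) -> Tuple[float, float]:
    """Convert a facility-coordinate point into AR session coordinates.

    Both poses describe the same physical marker, once in each coordinate system.
    """
    # Rotation that aligns the facility axes with the session axes.
    delta = marker_in_session.yaw_rad - marker_in_facility.yaw_rad
    # Express the point relative to the marker, then rotate and re-anchor it.
    dx = point[0] - marker_in_facility.x
    dy = point[1] - marker_in_facility.y
    c, s = math.cos(delta), math.sin(delta)
    return (
        marker_in_session.x + c * dx - s * dy,
        marker_in_session.y + s * dx + c * dy,
    )
```

A tool reported 2 m along the facility's x-axis from the marker would, for a session rotated by 90 degrees relative to the facility, appear 2 m along the session's y-axis from the marker.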

What are the areas of application for Augmented Reality?

The variety of application areas for AR is enormous. The central question should be which specific problems augmented reality is meant to solve. A short overview should show for which industries the technology is particularly interesting.

Why is AR the future?

As of 2021, augmented reality has established itself at numerous companies as a future-proof technology and an important component of their digitization strategies. In many cases, the technology is at the point where the leap from the project level to productive use has already happened or needs to happen soon. The breaking down of geographical distances and the networking of people as part of global digitization are changing the way both companies and private individuals work and live together.

The augmented reality industry has established itself as a key driver of digitization in recent years. Information must be displayed where it is needed. The development is moving away from stationary computers to mobile devices such as smartphones and smart glasses. Tech giants like Apple, Microsoft, Google and Facebook have long recognized that the future of data consumption lies in augmented realities, and so more and more data glasses are appearing for consumers.

The merging of the real and digital world is permanently changing the way we consume information, data and content. Three-dimensional data is playing an increasingly important role. Advancements in 5G, cloud and edge computing provide the infrastructural foundation for augmented reality to advance beyond the enterprise realm. Computing power from the cloud and the streaming of large amounts of data also enable the optimization of the form factor of data glasses. With all these developments, it remains to be said that augmented reality is an indispensable part of our new present.

XR Streaming with ISAR SDK

Boost the performance of your XR devices and get the most out of your 3D content. Because rendering is offloaded to more powerful servers, users can, for example, view highly detailed renderings with computationally intensive shader effects.

What AR devices are available?

The market for AR hardware is currently still dominated by a few main players. The VR hardware market, with its many virtual reality companies, is larger overall than the AR hardware market. Augmented reality, however, has higher potential in the B2B enterprise sector. The trend is toward mobile hardware: wired XR headsets are going wireless, and handhelds such as smartphones and tablets are coming to the fore.

Important criteria for the further rollout of AR hardware are industry acceptance (MDM integration, certification), an improved user experience (interaction, comfort), and financial viability. As of June 2021, AR devices worth a look include HoloLens 2, HoloLens 2 Industrial Edition, Trimble XR10, Magic Leap One, Nreal Light, ThinkReality A3, Vuzix M4000 and RealWear HMT-1.

How do I program AR apps?

The tools and skills needed to develop augmented reality apps depend on a number of factors. For example, the choice of hardware influences the development environment. One thing many AR apps have in common is that they are created in game engines. The leading providers are Unity and Unreal Engine. Some XR devices support multiple game engines, while others are compatible with only one, so the choice of game engine needs to be considered early on.

Depending on the tool, developers may need to master different programming languages such as C# or C++. In addition, development environments such as Visual Studio are used. Some data glasses also offer tools and SDKs that facilitate app development, such as Microsoft’s Mixed Reality Toolkit (MRTK). Software development kits (SDKs), application programming interfaces (APIs), and cloud services often provide additional features and can be incorporated into augmented reality apps. For AR app development on smartphones and tablets, the two major platforms are ARKit from Apple and ARCore from Google. There are also a number of other platforms such as Wikitude, as well as web-based augmented reality applications (Web AR) that can be used via a browser.

The push for standards such as OpenVR or OpenXR should curb the fragmentation of the XR market and facilitate development, especially across different platforms. Those who want to create augmented reality applications without programming skills can turn to one of the numerous content creation platforms.

What is the AR Cloud?

The AR Cloud is a persistent digital 3D representation of the real world. As a ubiquitous data platform, it is used to store and share information so that multiple users and devices can share AR experiences. The 3D virtual objects remain placed in their original position and relate to the physical environment. The idea of building a virtual world into the visible real world has been widely known at least since Pokémon Go.

In technical terms, the AR Cloud is a machine-readable model of the world on a scale of 1:1 that is continuously updated in real time. It is a collection of billions of machine-readable data sets, point clouds, and descriptors that are aligned with the coordinates of the real world. Similar to a digital twin of the world, the goal is to map and document information so that AR or even VR applications can use it for a better and richer experience. To deliver these experiences with good performance, the streaming of 3D data plays a major role. Examples of AR Cloud applications could be location-based AR advertising content or information on places of interest. One initiative working on the realization of this concept is the Open AR Cloud. In addition, there are closed systems from individual manufacturers such as PTC, 8th Wall, Niantic, Microsoft, Google, Apple and Facebook.
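The idea of content that stays anchored to real-world coordinates and can be retrieved by any nearby device can be sketched in a few lines of Python. This is a strongly simplified illustration: a real AR Cloud aligns devices using point clouds and visual descriptors rather than latitude and longitude alone, and the names `Anchor`, `AnchorStore` and `nearby` are hypothetical, not part of the Open AR Cloud or any vendor system.

```python
import math
from dataclasses import dataclass
from typing import Dict, List

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


@dataclass(frozen=True)
class Anchor:
    """A piece of virtual content pinned to a real-world location."""
    anchor_id: str
    lat_deg: float
    lon_deg: float
    content: str


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


class AnchorStore:
    """In-memory stand-in for a shared, persistent anchor database."""

    def __init__(self) -> None:
        self._anchors: Dict[str, Anchor] = {}

    def add(self, anchor: Anchor) -> None:
        self._anchors[anchor.anchor_id] = anchor

    def nearby(self, lat_deg: float, lon_deg: float, radius_m: float) -> List[Anchor]:
        """Return all anchors within radius_m of the given position, nearest first."""
        hits = [
            (haversine_m(lat_deg, lon_deg, a.lat_deg, a.lon_deg), a)
            for a in self._anchors.values()
        ]
        return [a for dist, a in sorted(hits, key=lambda h: h[0]) if dist <= radius_m]
```

A device that knows its approximate position would call `nearby(...)` to fetch the content placed around it, which is the same lookup that location-based advertising or place-of-interest information would rely on.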